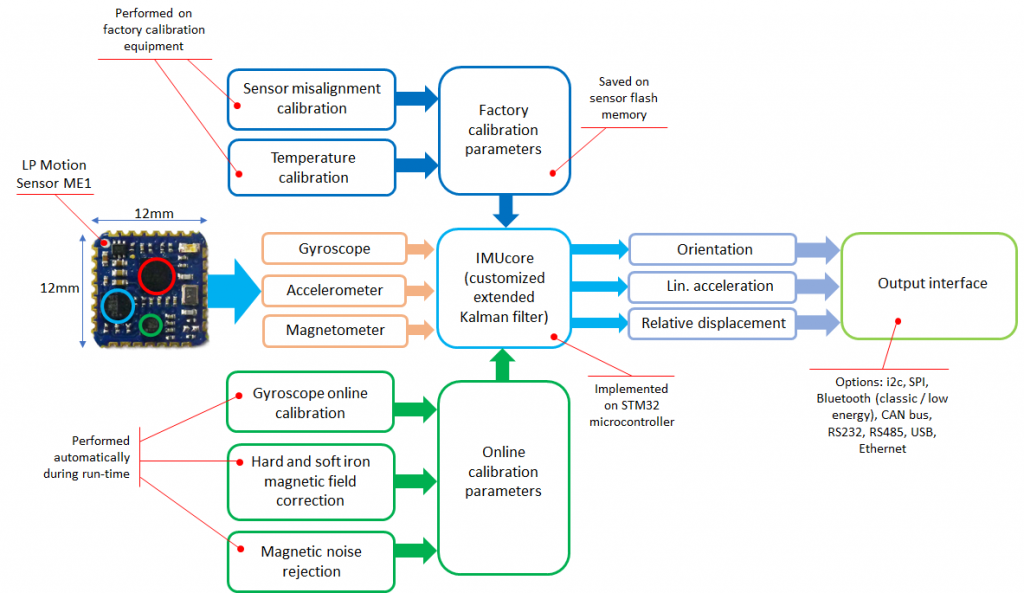

IMUcore Customizable Sensor Fusion Solution

Introducing IMUcore

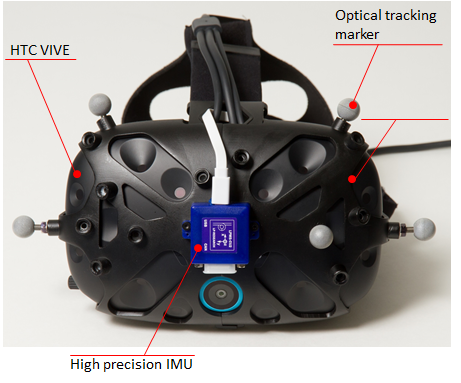

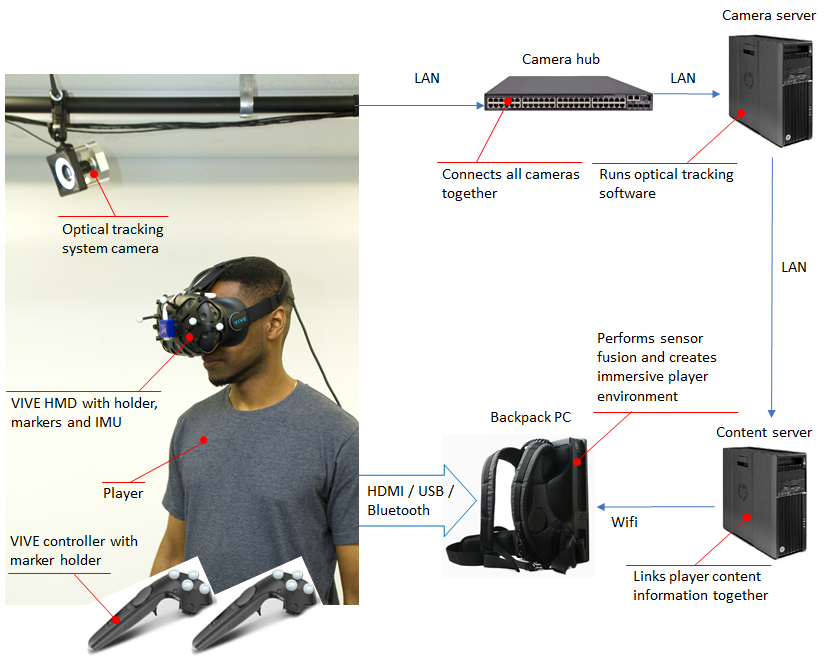

The IMUcore customizable sensor fusion solution is the central algorithm running inside all LP-RESEARCH IMUs. It collects orientation data from several sources and combines them into fast, drift-free tilt and direction information. To work with any type of MEMS sensor, it implements various online and offline calibration methods to ensure high-quality data output. The algorithm is versatile and computationally lightweight, so it can be implemented on embedded MCUs with minimal power consumption.

IMUcore is now available as a solution from LP-RESEARCH. Please contact us for more information or a price quotation.

Sensor Fusion Filter Overview

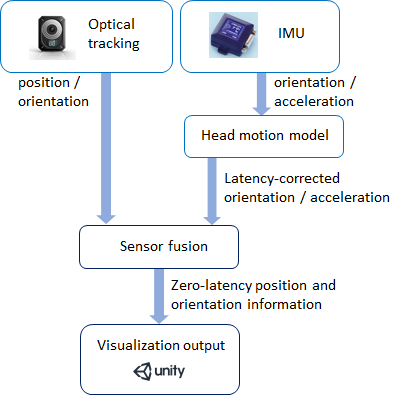

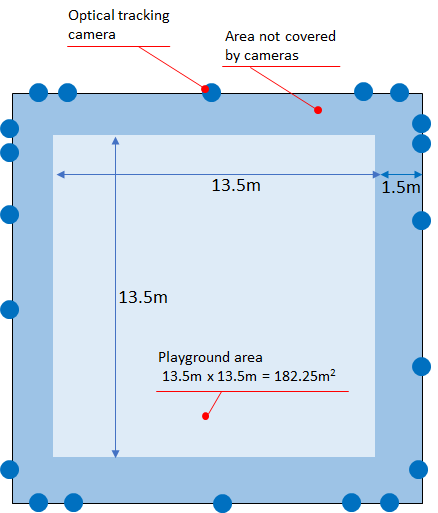

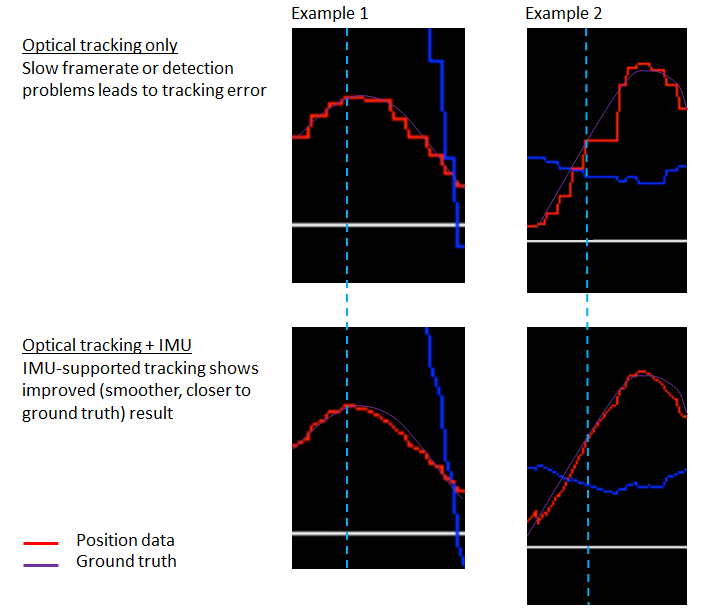

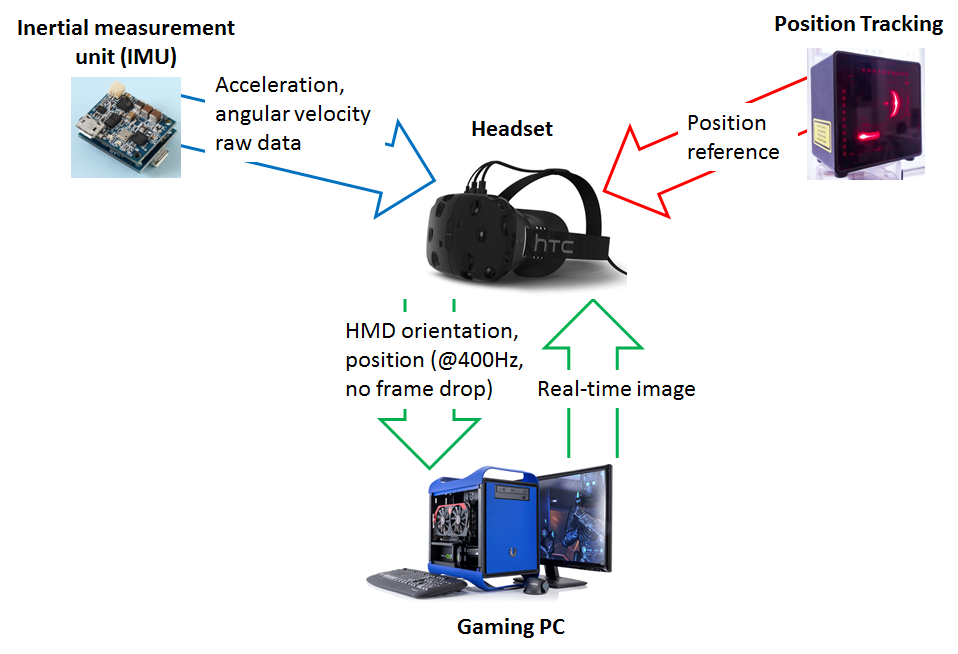

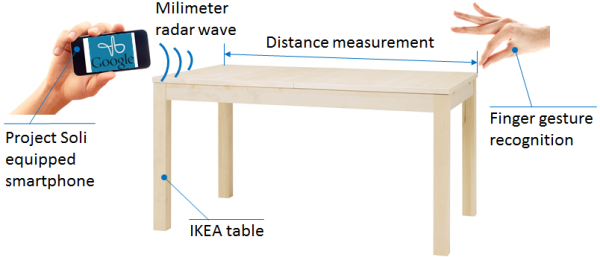

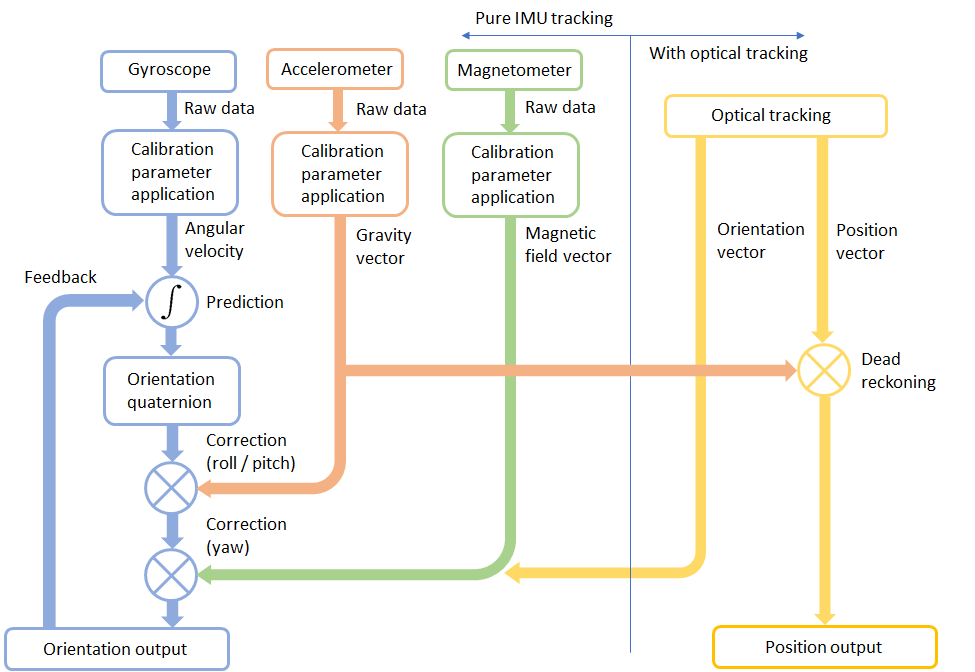

The IMUcore customizable sensor fusion solution uses gyroscope data as the primary input for calculating orientation. Errors introduced through measurement noise, which would otherwise accumulate as drift, are corrected using accelerometer and compass data. Optionally, the sensor fusion can be extended with an optical (or other) tracking system to provide position information as well. Our sensor devices provide some examples of IMUcore in use.
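To make the idea of gyroscope-based orientation corrected by accelerometer and compass data more concrete, here is a minimal sketch of a generic complementary filter. This is not the IMUcore algorithm, whose internals are not described on this page. The function names, the blend factor `alpha`, the Euler-angle representation, and the axis convention (accelerometer reads `(0, 0, +1)` g when level, compass heading already computed in radians) are all assumptions chosen for illustration.

```python
import math


def wrap_angle(a):
    """Wrap an angle in radians to the interval [-pi, pi)."""
    return (a + math.pi) % (2.0 * math.pi) - math.pi


def complementary_update(angles, gyro, accel, mag_heading, dt, alpha=0.98):
    """One step of a generic complementary filter (illustrative only).

    angles      -- current (roll, pitch, yaw) estimate in radians
    gyro        -- angular rates (gx, gy, gz) in rad/s
    accel       -- accelerometer reading (ax, ay, az); (0, 0, 1) when level
    mag_heading -- compass heading in radians (assumed precomputed)
    dt          -- time step in seconds
    alpha       -- hypothetical blend factor: weight given to the gyro path
    """
    roll, pitch, yaw = angles
    gx, gy, gz = gyro
    ax, ay, az = accel

    # 1) Predict: integrate the gyroscope rates. Treating body rates as
    #    Euler-angle rates is a small-angle simplification.
    roll_g = roll + gx * dt
    pitch_g = pitch + gy * dt
    yaw_g = yaw + gz * dt

    # 2) Absolute references: tilt from gravity, direction from the compass.
    roll_a = math.atan2(ay, az)
    pitch_a = math.atan2(-ax, math.hypot(ay, az))

    # 3) Correct: pull the gyro prediction toward the reference by a
    #    fraction (1 - alpha), using wrapped differences so the estimate
    #    takes the shortest way around the circle.
    k = 1.0 - alpha
    roll = wrap_angle(roll_g + k * wrap_angle(roll_a - roll_g))
    pitch = wrap_angle(pitch_g + k * wrap_angle(pitch_a - pitch_g))
    yaw = wrap_angle(yaw_g + k * wrap_angle(mag_heading - yaw_g))
    return (roll, pitch, yaw)


if __name__ == "__main__":
    # A stationary, level sensor whose gyroscope has a small constant bias.
    state = (0.0, 0.0, 0.0)
    for _ in range(1000):
        state = complementary_update(
            state, (0.01, 0.0, 0.01), (0.0, 0.0, 1.0), 0.0, dt=0.01
        )
    print(state)
```

In this sketch the gyroscope path dominates over short time scales, which keeps the output responsive, while the accelerometer and compass terms slowly remove the drift that pure integration would accumulate. A production filter would typically use quaternions and handle magnetic disturbances and linear acceleration, which this example leaves out.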

If this topic sounds familiar to you and you are looking for a solution to a related problem, contact us for further discussion.