Collaboration with Pimax

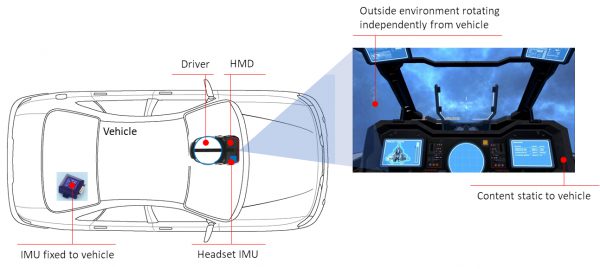

We are happy to announce a collaboration with the head-mounted display (HMD) manufacturer Pimax. Pimax HMDs feature very high-resolution displays (up to 8K) and an industry-leading field of view (up to 200°). By default, Pimax HMDs support SteamVR tracking and are therefore limited to relatively small tracking volumes.

We have developed a special driver that allows our LPVR middleware products, LPVR-CAD and LPVR-DUO, to work with Pimax headsets. With LPVR, the headsets can now be used in large-scale, location-based settings in combination with outside-in optical tracking systems such as ART (Advanced Real-Time Tracking).

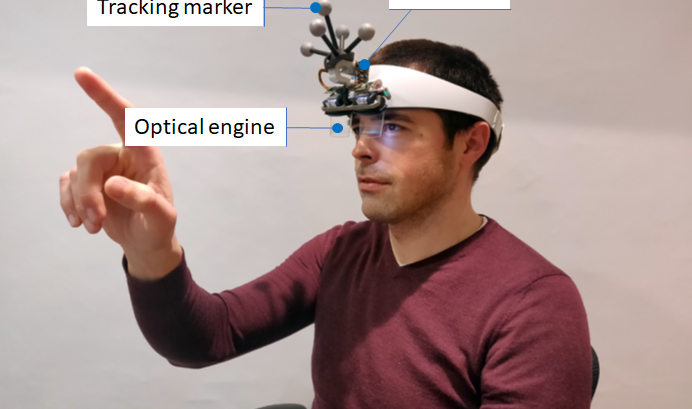

Pimax is planning to integrate UltraLeap hand tracking into its HMDs in the future, so we are confident that we will also be able to extend our inside-out tracking algorithm to their devices.

The video above shows the basic functionality of tracking a Pimax HMD using LPVR and an optical tracking system. The headset's motion is represented in SteamVR. For this demonstration the tracking volume is relatively small, but it can easily be extended by adding more outside-in tracking cameras.

This video was kindly provided to us by evoTec Solutions. evoTec is a new company in Switzerland that focuses on virtual reality (VR) solutions for corporations. Contact them for further information!