IMU-based Dead Reckoning (Displacement Tracking) Revisited

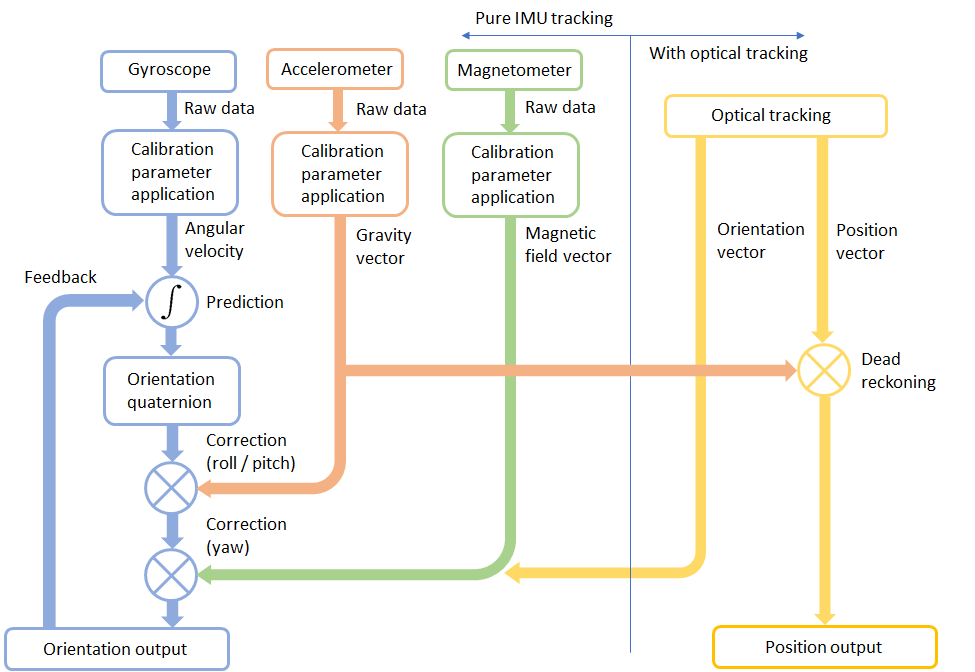

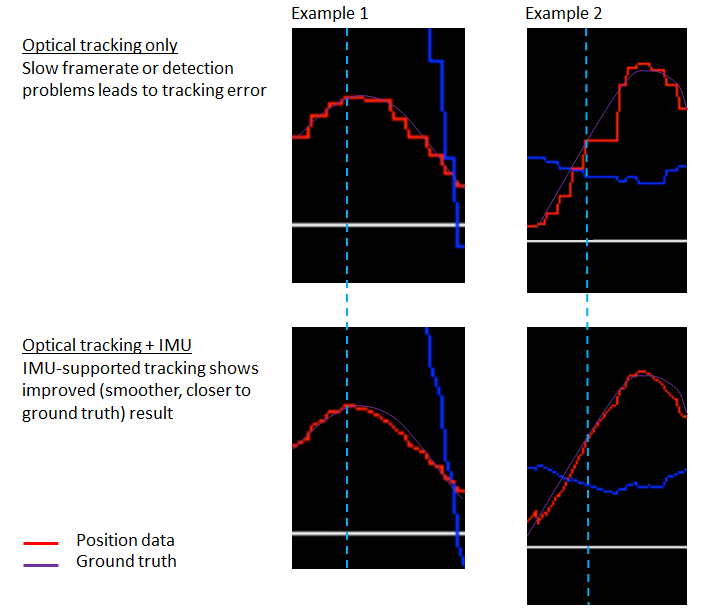

In a blog post a few years ago, we published the results of our experiments with direct integration of linear acceleration from our LPMS-B IMU. At that time, although we were able to process data in close to real time, displacement tracking only worked on one axis and only for very regular up-and-down motions.
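For readers unfamiliar with the idea, direct integration means integrating linear acceleration once to get velocity and a second time to get displacement. The following is only a minimal single-axis sketch of that principle, not the code used in our experiments. It assumes evenly spaced samples with gravity already removed, and the function names `integrate_trapezoid` and `displacement_from_acceleration` are hypothetical.

```python
def integrate_trapezoid(samples, dt, initial=0.0):
    """Cumulative trapezoidal integral of evenly spaced samples."""
    out = [initial]
    for prev, curr in zip(samples, samples[1:]):
        out.append(out[-1] + 0.5 * (prev + curr) * dt)
    return out


def displacement_from_acceleration(accel, dt):
    """Integrate acceleration twice: first to velocity, then to position.

    Assumes a single axis, gravity-free linear acceleration in m/s^2,
    and zero initial velocity and position.
    """
    velocity = integrate_trapezoid(accel, dt)
    position = integrate_trapezoid(velocity, dt)
    return velocity, position


# Synthetic input (not sensor data): 1 m/s^2 held for 1 s, sampled at 100 Hz.
dt = 0.01
accel = [1.0] * 101
velocity, position = displacement_from_acceleration(accel, dt)
print(round(velocity[-1], 3), round(position[-1], 3))
```

Because every sample is summed twice, any error in the acceleration signal is carried into the position estimate, which is why this naive approach only holds up for short, regular motions.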

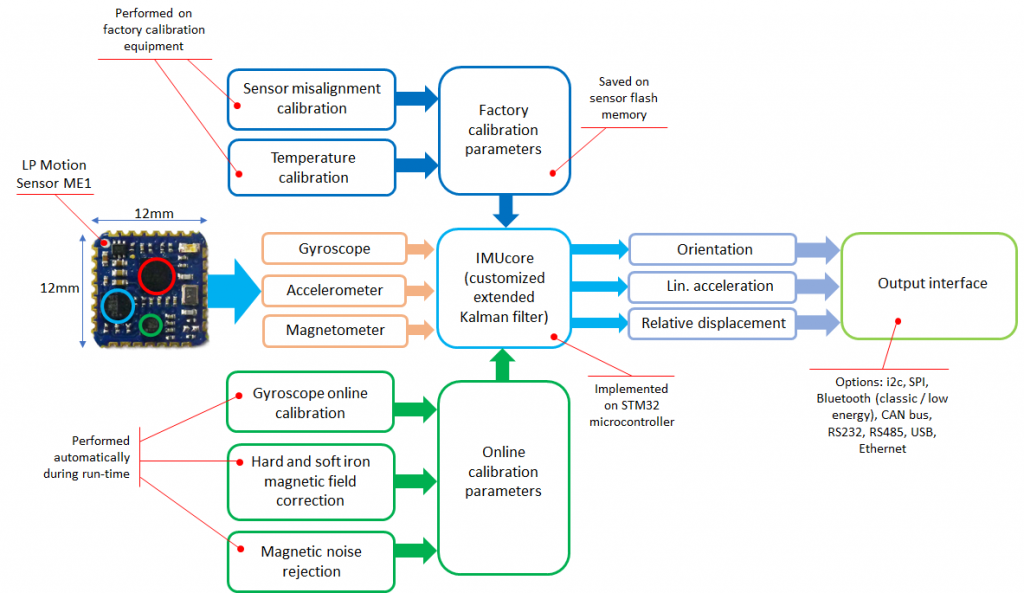

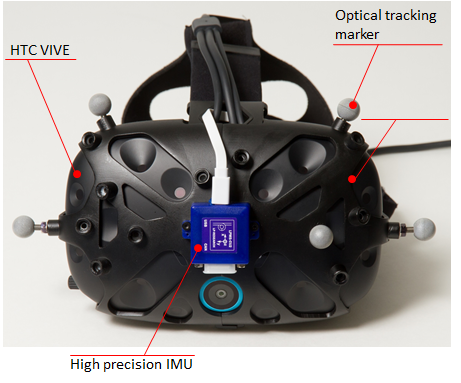

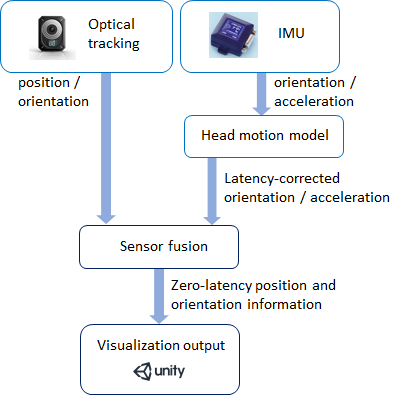

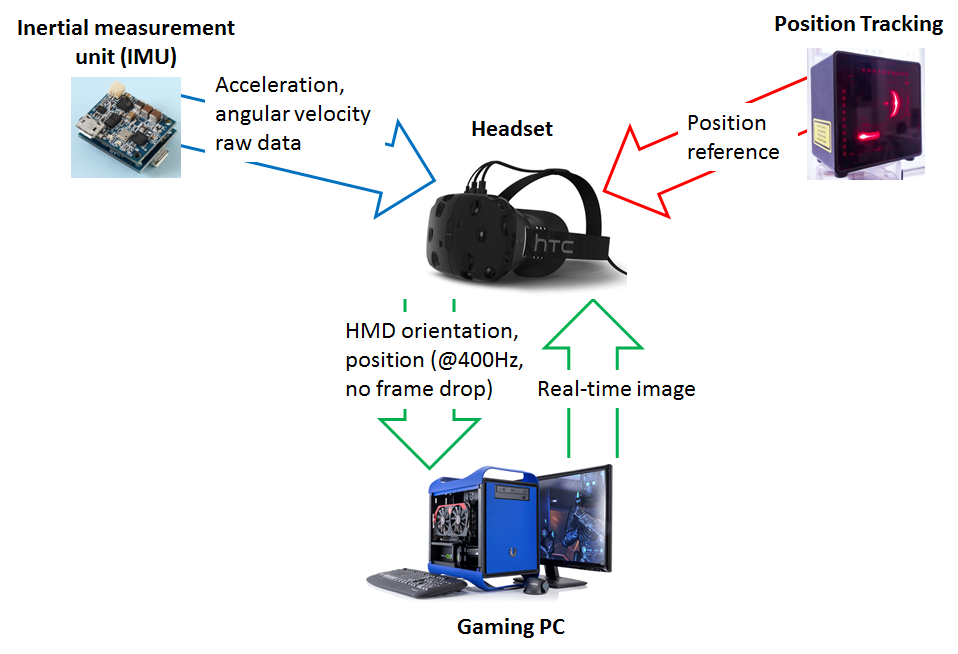

In the meantime, the measurement quality of our IMUs has improved, and we have put further work into researching dead reckoning applications. Low-cost MEMS sensors such as those used in our LPMS-B2 devices are still not suitable for measuring displacement over extended periods of time or with great accuracy. However, for some applications, such as sports motion measurement or as one component in a larger sensor fusion setup, the results are very promising.
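To illustrate why the duration of a measurement matters so much, consider a constant residual bias in the acceleration signal. Integrating it twice gives a position error of one half times the bias times the elapsed time squared, so the error grows quadratically. The sketch below evaluates that formula for an assumed bias of 0.01 m/s². This value is purely illustrative and is not a specification of the LPMS-B2, and the helper `drift_from_bias` is hypothetical.

```python
def drift_from_bias(bias_mps2, seconds):
    """Position error (m) from double-integrating a constant acceleration bias."""
    return 0.5 * bias_mps2 * seconds ** 2


# Assumed, illustrative bias in m/s^2. Not a measured LPMS-B2 figure.
ASSUMED_BIAS = 0.01

for t in (1, 10, 60):
    print(f"{t:>3} s -> {drift_from_bias(ASSUMED_BIAS, t):.3f} m")
```

Under this assumption the error stays at a few millimeters over one second but reaches meters within a minute. This is consistent with short motions being a much better fit for this kind of tracking than long recordings.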

A further experiment shows this algorithm applied to the evaluation of boxing motions. This system might serve as a base component for IoT boxing gloves that allow automatic evaluation of an athlete's technique and strength, or it might be integrated into an advanced controller for virtual reality sports.

As usual, please contact us for further information.