Accurate Mixed Reality with LPVR-CAD and Varjo XR-3

Anchoring Virtual Objects with Varjo XR-3

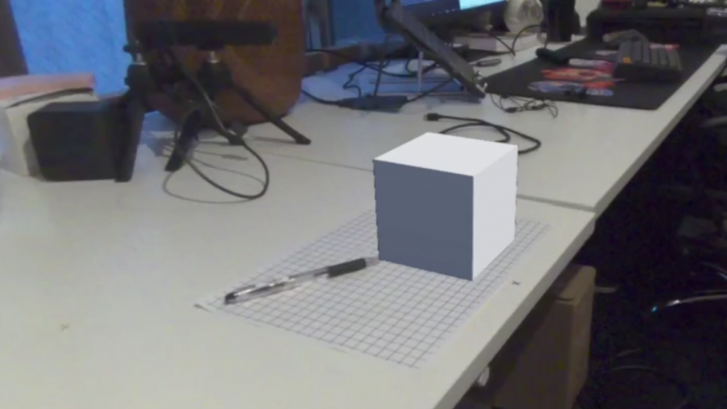

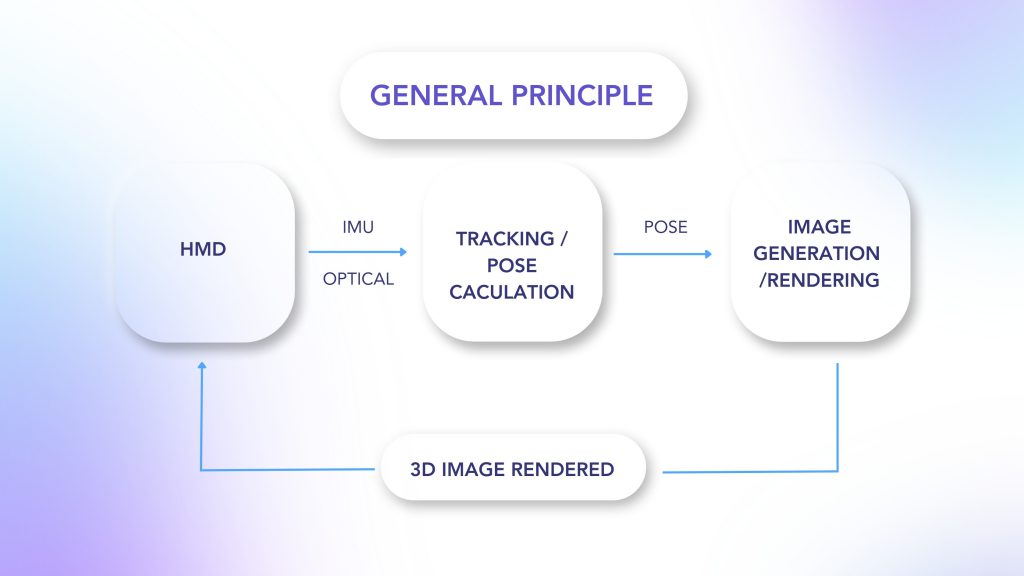

A key aspect of making a mixed reality experience compelling and useful is correctly anchoring virtual content to the real world. An object whose position and orientation (pose) are fixed relative to the real world should not change its pose, as seen by the user, when the user moves around the scene wearing the headset. Imagine a simple virtual cube placed so that it sits on a real table. As the user walks around, the cube should stay in place, regardless of the perspective from which the user views it.
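
The anchoring requirement can be made concrete with a little geometry. The Python sketch below is our own illustration, not LPVR-CAD or Varjo code. The function names, the yaw-only rotation and the cube coordinates are hypothetical. In each frame, the renderer expresses the world-fixed cube in the current head frame via `p_head = R^T (p_world - t)`. The head-frame coordinates change as the user walks around, but mapping them back with the same tracked pose `(R, t)` always recovers the same world position. The cube therefore only appears fixed if the tracked pose matches the true head pose.

```python
import math

# Poses are given as (R, t): a 3x3 rotation matrix and a translation in metres
# that map head-frame points into world coordinates (p_world = R p_head + t).


def yaw_rotation(angle_rad):
    """Rotation about the vertical (y) axis; yaw only, to keep the sketch short."""
    c, s = math.cos(angle_rad), math.sin(angle_rad)
    return [[c, 0.0, s],
            [0.0, 1.0, 0.0],
            [-s, 0.0, c]]


def mat_vec(R, v):
    """Multiply a 3x3 matrix by a 3-vector."""
    return [sum(R[i][j] * v[j] for j in range(3)) for i in range(3)]


def transpose(R):
    """Transpose of a 3x3 matrix; for a rotation matrix this is its inverse."""
    return [[R[j][i] for j in range(3)] for i in range(3)]


def world_to_head(R, t, p_world):
    """Express a world point in the head frame: p_head = R^T (p_world - t)."""
    return mat_vec(transpose(R), [p_world[i] - t[i] for i in range(3)])


def head_to_world(R, t, p_head):
    """Inverse of world_to_head: p_world = R p_head + t."""
    r = mat_vec(R, p_head)
    return [r[i] + t[i] for i in range(3)]


# Hypothetical anchor: a cube on a table, 0.75 m high, 1.5 m in front of the origin.
CUBE_WORLD = [0.0, 0.75, -1.5]


def render_positions(head_poses):
    """Head-frame position at which the cube must be drawn for each tracked head pose."""
    return [world_to_head(R, t, CUBE_WORLD) for R, t in head_poses]


if __name__ == "__main__":
    # Hypothetical walk around the table: (rotation, head position) per frame.
    walk = [
        (yaw_rotation(0.0), [0.0, 1.7, 0.0]),
        (yaw_rotation(math.radians(30)), [-0.8, 1.7, -0.2]),
        (yaw_rotation(math.radians(-45)), [1.0, 1.6, -0.5]),
    ]
    for (R, t), p_head in zip(walk, render_positions(walk)):
        world = head_to_world(R, t, p_head)
        print("head frame:", [round(x, 3) for x in p_head],
              "world:", [round(x, 3) for x in world])
```

Running the script prints a different head-frame position for every pose, while the world position stays at the anchor each time.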

In more detail, correct anchoring of virtual objects to reality depends on the following points:

- To render correctly aligned content in a mixed reality experience, accurate knowledge of the user's head pose is essential. We calculate the head pose using LPVR-CAD.

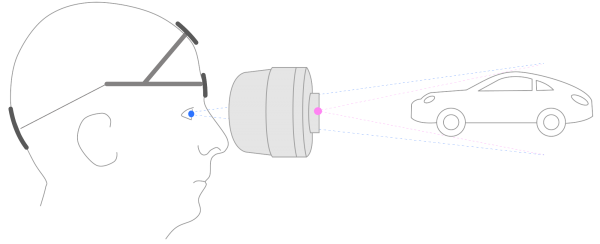

- The field of view captured by the headset cameras differs considerably from the natural field of view of the human eyes, so appropriate calibration is needed to compensate for this effect. The illustration below shows this inherent problem of video pass-through very clearly. Varjo's HMDs are factory calibrated to minimize its impact on the user experience.

To get a better idea of how a correct video pass-through calibration influences mixed reality performance, we recommend experimenting with the camera configuration options in Varjo Lab Tools.

– Image credit: Varjo mixed reality documentation
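
To see why head pose accuracy matters so much, consider a rough error model. The sketch below is an approximation we chose for illustration. The function name and the worst-case assumption that position and orientation errors point the same way and simply add up are ours, not part of LPVR-CAD. A position error perpendicular to the line of sight shifts an anchored object by the same amount. An orientation error of θ shifts an object at distance d by about d·tan(θ).

```python
import math


def apparent_shift_cm(distance_m, position_error_m=0.0, orientation_error_deg=0.0):
    """Rough worst-case estimate (in cm) of how far a world-anchored object
    appears to move when the tracked head pose is wrong.

    Assumptions (illustrative only):
    - the position error is perpendicular to the line of sight,
    - the orientation error rotates the view about an axis perpendicular
      to the line of sight,
    - both errors push the object in the same direction, so they add up.
    """
    # Angular error seen at the object's distance becomes a lateral offset.
    rotational_m = distance_m * math.tan(math.radians(orientation_error_deg))
    return 100.0 * (position_error_m + rotational_m)


if __name__ == "__main__":
    # Hypothetical tabletop distance of 1.5 m.
    print(apparent_shift_cm(1.5, orientation_error_deg=0.5))
    print(apparent_shift_cm(1.5, position_error_m=0.005, orientation_error_deg=0.5))
```

For example, an orientation error of only 0.5° shifts a cube 1.5 m away by roughly 1.3 cm. Sub-degree orientation errors are therefore already visible at tabletop distances, and the effect grows linearly with distance.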

Functional Testing of MR Performance

At the very least, our LPVR solution must achieve a precision that is satisfactory for our users' typical applications. We therefore ran a series of experiments to evaluate the precision of our system for mixed reality experiences and to compare it with SteamVR Lighthouse tracking.

We evaluated the following configurations:

| # | HMD | Engine | Tracking system |
|---|---|---|---|
| 1 | Varjo XR-3 | Unreal 5.2 | LPVR-CAD |
| 2 | Varjo XR-3 | Unreal 5.2 | Lighthouse |

1 – LPVR Tracking System

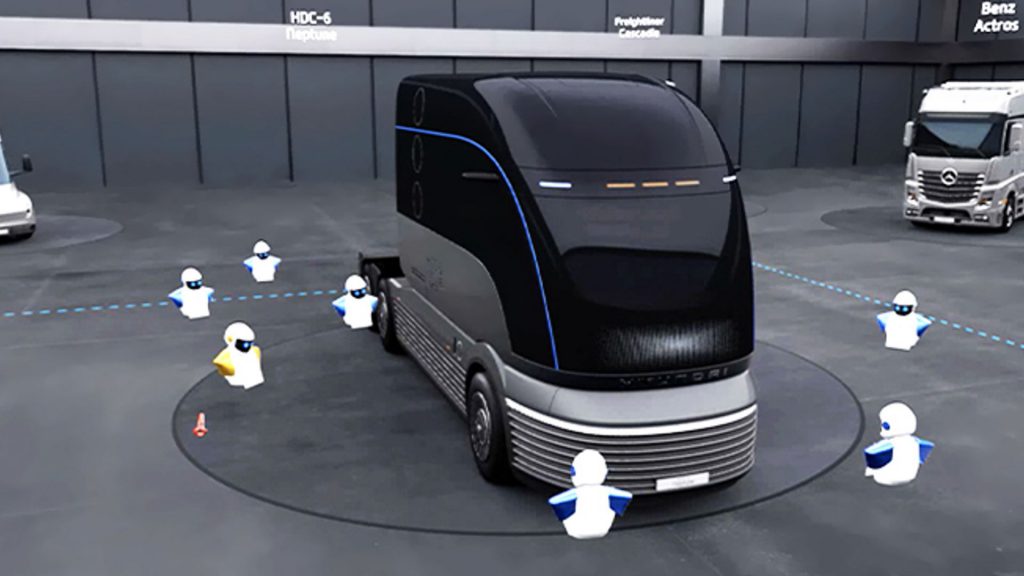

In this scenario, we fixed a simple virtual cube on a tabletop at a defined position and orientation. The cube is the only virtual object in the scene; everything else is the live video feed passed through from the HMD cameras. We track the HMD using LPVR-CAD in combination with an ART Smarttrack 3. No markers are used to stabilize the pose of the cube.

Important note: Tracking performance in the mixed reality use case can change significantly if the marker target attached to the HMD is not correctly adjusted. Please refer to the LPVR-CAD documentation or contact us for further support.

2 – Lighthouse Tracking System

The basic setup of this scenario is identical to scenario #1, except that we use Lighthouse tracking to determine the pose of the HMD.

Conclusion

The table below summarizes our preliminary findings:

| LPVR Tracking | Lighthouse Tracking |
|---|---|
| 2 | 2.5 |
| 1.5 | 1.5 |

– Approximate displacement error on the horizontal plane (in cm)

Using the same room setup and test scene, LPVR-CAD and Lighthouse tracking achieve similar mixed reality accuracy. With both tracking systems, slight shifts of 1-2 cm can be observed, depending on the head movement. Good results with LPVR tracking require precise adjustment of the optical target attached to the headset.

Note that our method of estimating the displacement error is qualitative rather than quantitative. In this post, we made a general comparison of LPVR and Lighthouse tracking. A more rigorous quantitative evaluation will follow.
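
One way a more quantitative evaluation could be structured is sketched below under our own assumptions. It is not the procedure used for the table above, and the function names and sample values are hypothetical. The idea is to record where the virtual cube appears on the table plane at several head positions, then report the maximum, mean and RMS horizontal distance from its intended position.

```python
import math
from typing import Iterable, List, Tuple

Point2D = Tuple[float, float]  # (x, z) on the horizontal table plane, in cm


def horizontal_errors(reference: Point2D, observed: Iterable[Point2D]) -> List[float]:
    """Horizontal distance between each observed cube position and the
    intended (reference) position."""
    rx, rz = reference
    return [math.hypot(x - rx, z - rz) for x, z in observed]


def summarize(errors: List[float]) -> dict:
    """Max, mean and RMS displacement; reporting all three prevents a
    single large outlier from hiding behind the average."""
    if not errors:
        raise ValueError("no samples")
    n = len(errors)
    return {
        "max_cm": max(errors),
        "mean_cm": sum(errors) / n,
        "rms_cm": math.sqrt(sum(e * e for e in errors) / n),
    }


if __name__ == "__main__":
    # Hypothetical samples for illustration only, not measured data.
    samples = [(1.0, 0.5), (-0.5, 1.2), (0.8, -0.9)]
    print(summarize(horizontal_errors((0.0, 0.0), samples)))
```

The same two functions could be run per tracking system and per head-movement pattern, so that the two systems are compared on identical sample sets.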

For customers who want to minimize drift, we recommend optical tracking. Results may differ with the Varjo XR-4 or with other variations in the tracking environment or displayed content, which could warrant further testing in the future.

We provide complete solutions with cutting-edge tracking systems and content for a variety of headsets. For detailed information on supported HMD models, please reach out to us.