Introduction

Over the past years, our team at LP-RESEARCH has worked on a number of customer projects that required us to customize our sensor fusion algorithms for specific applications.

Such customization rarely requires significant changes to our existing filter algorithms. In most cases it only involves changes to input/output interfacing or specialized extensions of the core functionality.

Our own products, such as the LPMS inertial measurement units, the LPVR series of virtual and augmented reality tracking systems, and LPVIZ, are largely based on the same fundamental algorithms, but with different sensor inputs and data output interfaces.

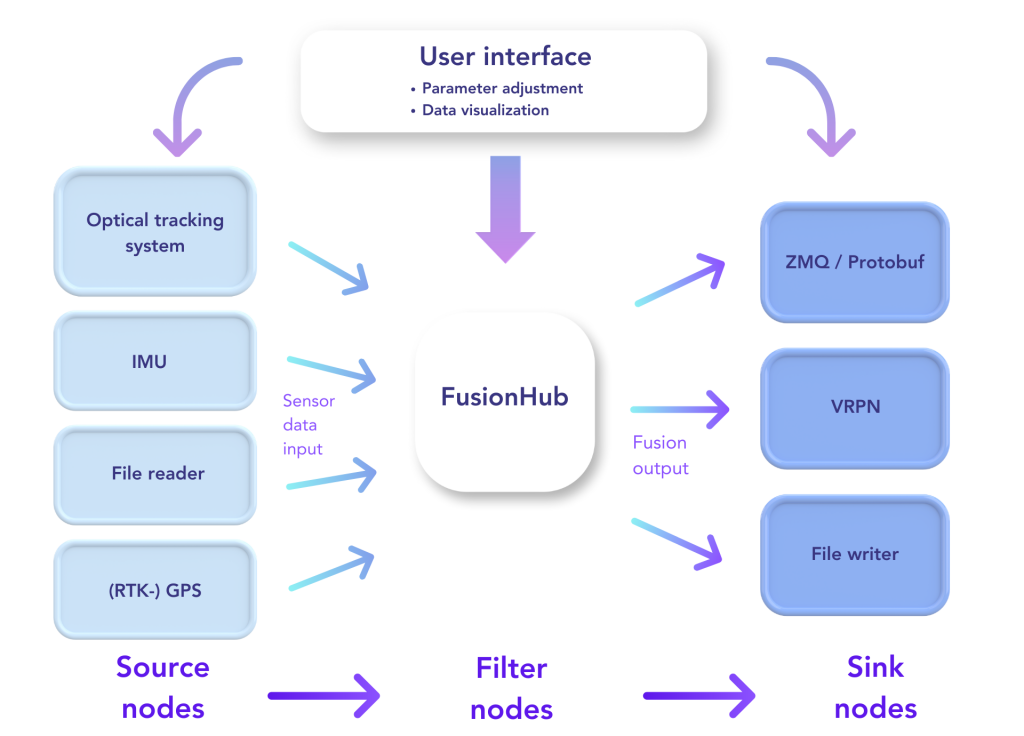

For this reason, we decided to develop a modular platform that lets us build a graph of nodes, each node having a specific function as one of the following:

- Sensor data sources (gyroscope, GPS, etc.)

- Filter algorithms (IMU-optical filter, odometry-GPS filter, etc.)

- Output sinks (file writer, WebSocket output, VRPN output, etc.)
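To make the source/filter/sink split concrete, here is a minimal in-process sketch in Python. It is not FusionHub's implementation: the `Graph` and `Node` classes, the endpoint names and the toy gyroscope integrator are all hypothetical, and messages are plain dictionaries delivered synchronously rather than ZeroMQ/Protocol Buffers messages.

```python
from typing import Callable, Dict, List, Optional

Message = dict
# A handler receives (endpoint, message) and may return a message to publish.
Handler = Callable[[str, Message], Optional[Message]]


class Graph:
    """Hypothetical in-process node graph that routes messages by endpoint name."""

    def __init__(self) -> None:
        self.subscribers: Dict[str, List["Node"]] = {}

    def add(self, node: "Node") -> "Node":
        for endpoint in node.inputs:
            self.subscribers.setdefault(endpoint, []).append(node)
        node.graph = self
        return node

    def publish(self, endpoint: str, msg: Message) -> None:
        # Synchronously deliver the message to every subscriber of the endpoint.
        for node in self.subscribers.get(endpoint, []):
            node.receive(endpoint, msg)


class Node:
    """Source: no inputs. Filter: inputs and an output. Sink: no output."""

    def __init__(self, name: str, inputs: List[str],
                 output: Optional[str], handler: Handler) -> None:
        self.name = name
        self.inputs = inputs
        self.output = output
        self.handler = handler
        self.graph: Optional[Graph] = None

    def emit(self, msg: Message) -> None:
        # Used by source nodes (and by filters via receive) to publish a message.
        if self.graph is not None and self.output is not None:
            self.graph.publish(self.output, msg)

    def receive(self, endpoint: str, msg: Message) -> None:
        result = self.handler(endpoint, msg)
        if result is not None:
            self.emit(result)


# Toy pipeline: gyroscope source -> yaw integrator (filter) -> list logger (sink).
yaw_log: List[float] = []
state = {"yaw": 0.0}


def integrate_yaw(endpoint: str, msg: Message) -> Message:
    state["yaw"] += msg["rate_z"] * msg["dt"]  # rad/s * s -> rad
    return {"yaw": state["yaw"]}


def log_yaw(endpoint: str, msg: Message) -> None:
    yaw_log.append(msg["yaw"])


graph = Graph()
gyro = graph.add(Node("gyro", [], "inproc://gyro", lambda ep, m: m))
graph.add(Node("integrator", ["inproc://gyro"], "inproc://yaw", integrate_yaw))
graph.add(Node("logger", ["inproc://yaw"], None, log_yaw))

for _ in range(3):
    gyro.emit({"rate_z": 0.5, "dt": 0.01})
print(yaw_log)
```

The wiring depends only on endpoint names, so replacing the synchronous `publish` with a queue or socket is what would let nodes run on separate threads or machines without changing how the graph is described.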

Modular Platform

We call this modular platform FusionHub, or sensor fusion operating system (SFOS), as it provides an end-to-end solution for applications that need a high-performance, flexible sensor input/output and filtering system. FusionHub is platform-independent and runs on Windows, Linux and Android. We are also working on a port to Apple’s visionOS.

One might argue that a very similar solution already exists in the form of the Robot Operating System (ROS). ROS is a powerful platform, but it requires a relatively large number of base components to run.

For communication between its nodes, FusionHub relies on the ZeroMQ messaging library and Google’s Protocol Buffers. This keeps the application lightweight and independent of any other software stack or platform.

Apart from proprietary external libraries that may be needed to connect to certain sensor systems, FusionHub is a standalone application. It runs even on embedded hardware such as the Meta Quest series of virtual reality headsets.

Easy Configuration

The components of a FusionHub graph are configured in a simple JSON file. For example, a sensor input from one of our LPMS-IG1 IMUs is configured as shown below:

```json
"imu": {
    "outEndpoint": "inproc://imu_data_source",
    "settings": {
        "autodetectType": "ig1"
    },
    "type": "OpenZen"
}
```
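One way to read such a fragment is to treat the `type` field as a key into a registry of node factories. The Python sketch below does exactly that; the registry, the `register` decorator and the `OpenZenSource` class are hypothetical stand-ins, not FusionHub or OpenZen APIs. The fragment is wrapped in braces so that it forms a complete JSON document.

```python
import json
from typing import Callable, Dict

CONFIG = """
{
  "imu": {
    "outEndpoint": "inproc://imu_data_source",
    "settings": {"autodetectType": "ig1"},
    "type": "OpenZen"
  }
}
"""

# Hypothetical registry mapping the "type" field to a node class.
NODE_TYPES: Dict[str, Callable] = {}


def register(type_name: str):
    def decorator(cls):
        NODE_TYPES[type_name] = cls
        return cls
    return decorator


@register("OpenZen")
class OpenZenSource:
    """Placeholder for an IMU source node; it only stores its settings."""

    def __init__(self, name: str, cfg: dict) -> None:
        self.name = name
        self.out_endpoint = cfg["outEndpoint"]
        self.sensor_type = cfg.get("settings", {}).get("autodetectType")


def build_nodes(text: str) -> dict:
    nodes = {}
    for name, cfg in json.loads(text).items():
        factory = NODE_TYPES.get(cfg.get("type"))
        if factory is None:
            raise ValueError(f"unknown node type {cfg.get('type')!r} for node {name!r}")
        nodes[name] = factory(name, cfg)
    return nodes


nodes = build_nodes(CONFIG)
imu = nodes["imu"]
print(imu.out_endpoint, imu.sensor_type)  # inproc://imu_data_source ig1
```

Failing loudly on an unknown `type` keeps a typo in the configuration from silently dropping a node out of the graph.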

An IMU-optical sensor fusion node would be configured with a script similar to the one below:

```json
"fusion": {
    "dataEndpoint": "inproc://fusion_output",
    "inputEndpoints": [
        "inproc://optical_data_source",
        "inproc://imu_data_source"
    ],
    "settings": {
        "SensorFusion": {
            "alignment": {
                "w": 0.464394368797396,
                "x": -0.545272191516426,
                "y": 0.5236543865322006,
                "z": 0.46130487841960544
            },
            "orientationWeight": 0.005,
            "predictionInterval": 0.01,
            "sggPointsEachSide": 5,
            "sggPolynomialOrder": 5,
            "tiltCorrection": null,
            "yawWeight": 0.01
        }
    },
    "type": "ImuOpticalFusion"
}
```
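The `alignment` entry is a quaternion. Assuming it is a unit quaternion in (w, x, y, z) order that rotates vectors from one sensor frame into the other (the exact frame convention is FusionHub's and is not stated here), the Python sketch below checks its norm and applies it to a vector with the standard Hamilton product, v' = q (0, v) q*. The helper names are hypothetical.

```python
import math
from typing import Tuple

Quat = Tuple[float, float, float, float]  # (w, x, y, z)
Vec3 = Tuple[float, float, float]

# Values copied from the "alignment" block of the fusion node configuration.
ALIGNMENT = {"w": 0.464394368797396, "x": -0.545272191516426,
             "y": 0.5236543865322006, "z": 0.46130487841960544}


def quat_mul(a: Quat, b: Quat) -> Quat:
    """Hamilton product a * b."""
    aw, ax, ay, az = a
    bw, bx, by, bz = b
    return (aw * bw - ax * bx - ay * by - az * bz,
            aw * bx + ax * bw + ay * bz - az * by,
            aw * by - ax * bz + ay * bw + az * bx,
            aw * bz + ax * by - ay * bx + az * bw)


def rotate(q: Quat, v: Vec3) -> Vec3:
    """Rotate v by the unit quaternion q: v' = q * (0, v) * conj(q)."""
    w, x, y, z = q
    _, rx, ry, rz = quat_mul(quat_mul(q, (0.0, *v)), (w, -x, -y, -z))
    return (rx, ry, rz)


q_align: Quat = (ALIGNMENT["w"], ALIGNMENT["x"], ALIGNMENT["y"], ALIGNMENT["z"])
norm = math.sqrt(sum(c * c for c in q_align))
print(f"norm = {norm:.6f}")  # norm = 1.000000, so it is a pure rotation

# Where the z axis of the source frame ends up after alignment.
print(rotate(q_align, (0.0, 0.0, 1.0)))
```

If a hand-edited quaternion drifted away from unit length, normalizing it before use would keep the rotation from also scaling the vectors it is applied to.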

Each node has input and output endpoints (source nodes only have outputs, sink nodes only inputs) through which other nodes connect to it. Connections can be restricted to within the application (“inproc://...”) or made accessible from outside it (“tcp://...”). This makes it possible to build node networks distributed across several machines. For example, one computer could acquire data from a specific sensor while another applies a sensor fusion algorithm to the incoming data.

The same endpoint mechanism also offers a simple way for additional nodes developed by third parties to communicate with or through FusionHub.
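The endpoint scheme decides how far a connection reaches. The Python sketch below wires nodes by matching each `inputEndpoints` entry against the output endpoints declared in the same configuration; the `wire` helper, the TCP address and the modified fusion inputs are hypothetical. It treats an unmatched `tcp://` input as coming from another machine, while an unmatched `inproc://` input is reported as an error, since in-process endpoints cannot be reached from outside the application.

```python
import json
from typing import Dict, List, Optional, Tuple

# Hypothetical config: the IMU runs in this process, while optical tracking
# data arrives over TCP from another machine (the address is made up).
CONFIG = json.loads("""
{
  "imu": {"type": "OpenZen", "outEndpoint": "inproc://imu_data_source"},
  "fusion": {
    "type": "ImuOpticalFusion",
    "dataEndpoint": "inproc://fusion_output",
    "inputEndpoints": ["tcp://192.168.0.20:8899", "inproc://imu_data_source"]
  }
}
""")


def output_endpoint(cfg: dict) -> Optional[str]:
    # The examples above use "outEndpoint" for sources, "dataEndpoint" for filters.
    return cfg.get("outEndpoint") or cfg.get("dataEndpoint")


def transport(endpoint: str) -> str:
    scheme = endpoint.split("://", 1)[0]
    if scheme not in ("inproc", "tcp"):
        raise ValueError(f"unsupported endpoint: {endpoint!r}")
    return scheme


def wire(config: Dict[str, dict]) -> List[Tuple[str, str, str]]:
    """Return (producer, consumer, transport) edges for the graph."""
    producers = {output_endpoint(c): name for name, c in config.items()
                 if output_endpoint(c) is not None}
    edges = []
    for name, cfg in config.items():
        for ep in cfg.get("inputEndpoints", []):
            kind = transport(ep)
            if ep in producers:
                edges.append((producers[ep], name, kind))
            elif kind == "tcp":
                edges.append(("<remote>", name, kind))
            else:
                raise ValueError(f"{name}: no in-process producer for {ep}")
    return edges


for src, dst, kind in wire(CONFIG):
    print(f"{src} -> {dst} via {kind}")
# <remote> -> fusion via tcp
# imu -> fusion via inproc
```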

So far, we have created the following node components:

Inputs

- LPMS input node

- Optical tracking system input node (ART, Optitrack, VICON, Antilatency)

- (RTK-)GPS input nodes (NMEA, RTCM)

- Car odometry via CAN bus input node

Outputs

- File writer node

- VRPN output node

- WebSocket output node

Filters

- IMU-optical fusion node (for LPVR systems)

- GPS-odometry fusion node (for car navigation)

- RTK-GPS-odometry-IMU fusion node (for car navigation)
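The (RTK-)GPS input node has to decode NMEA sentences before any fusion can happen. As a rough, standalone illustration (not FusionHub's parser), the Python sketch below validates the NMEA 0183 checksum, which is the XOR of all characters between `$` and `*`, and extracts position fields from a GGA sentence. The function names are hypothetical, and only the GGA fields used here are handled.

```python
from functools import reduce
from typing import List


def nmea_checksum(body: str) -> int:
    """XOR of all characters between '$' and '*' (NMEA 0183)."""
    return reduce(lambda acc, ch: acc ^ ord(ch), body, 0)


def parse_sentence(line: str) -> List[str]:
    line = line.strip()
    if not line.startswith("$") or "*" not in line:
        raise ValueError("not an NMEA sentence")
    body, _, given = line[1:].partition("*")
    if nmea_checksum(body) != int(given[:2], 16):
        raise ValueError("checksum mismatch")
    return body.split(",")


def to_degrees(value: str, hemisphere: str) -> float:
    """Convert ddmm.mmmm / dddmm.mmmm to signed decimal degrees.

    Empty fields (no fix yet) raise ValueError.
    """
    dot = value.index(".")
    degrees = float(value[:dot - 2])
    minutes = float(value[dot - 2:])
    result = degrees + minutes / 60.0
    return -result if hemisphere in ("S", "W") else result


def parse_gga(line: str) -> dict:
    f = parse_sentence(line)
    if not f[0].endswith("GGA"):
        raise ValueError(f"not a GGA sentence: {f[0]}")
    return {
        "utc": f[1],
        "lat": to_degrees(f[2], f[3]),
        "lon": to_degrees(f[4], f[5]),
        # 0 = invalid, 1 = GPS fix, 2 = DGPS, 4 = RTK fixed, 5 = RTK float
        "fix_quality": int(f[6]),
        "satellites": int(f[7]),
        "altitude_m": float(f[9]),
    }


SAMPLE = "$GPGGA,123519,4807.038,N,01131.000,E,1,08,0.9,545.4,M,46.9,M,,*47"
fix = parse_gga(SAMPLE)
print(round(fix["lat"], 4), round(fix["lon"], 4), fix["satellites"])
# 48.1173 11.5167 8
```

An RTK-capable receiver reports fix quality 4 or 5 in the same field, which is one signal a downstream fusion node could use to decide how much to trust a position sample.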

Future Outlook

We have successfully deployed FusionHub in our own products as well as in several customer projects.

While our collection of node components and the range of applications for FusionHub keep growing, we invest significant development resources in testing frameworks that ensure FusionHub's performance and robustness. Because FusionHub is mission critical in our LPVIZ driving assistance system, the reliability and redundancy of our core framework are essential.

In the future, we plan to connect FusionHub with LPIOT, our internal IoT solution, to make our core algorithms available to large numbers of sensor devices in parallel. Furthermore, we are working with the machine learning-focused company Archetype AI to add deeper intelligence to this solution.

For more information on how to use FusionHub, see the FusionHub documentation.