Machine Learning for Context Analysis

Deterministic Analysis vs. Machine Learning

Machine learning and artificial intelligence (AI) are important methods that allow machines to classify information about their environment. Today’s smart devices integrate an array of sensors that constantly measure and store data. At first thought, one might imagine that the more data is available, the easier it is to draw conclusions from it. In fact, however, larger amounts of data become harder to analyze using deterministic methods (e.g. thresholding). While such methods can work efficiently on their own, it is difficult to decide which analysis parameters to apply to which parts of the data.
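As an illustration of the kind of deterministic rule meant here, the following Python sketch labels a window of 3-axis accelerometer samples by thresholding the spread of the acceleration magnitude. The feature, the two threshold values and the function names are hypothetical choices for this example, not parameters from our system. This rule can only distinguish rest, walking and running; every additional class needs further features and thresholds, which is where choosing the right parameters becomes difficult.

```python
import math
import statistics

# Hypothetical, hand-picked thresholds (not from our system) on the
# standard deviation of the acceleration magnitude within one window, in m/s^2.
REST_MAX_STD = 0.3
WALK_MAX_STD = 3.0


def magnitude(sample):
    """Euclidean norm of one 3-axis accelerometer sample (ax, ay, az)."""
    ax, ay, az = sample
    return math.sqrt(ax * ax + ay * ay + az * az)


def classify_by_threshold(window):
    """Label a window of 3-axis samples using fixed thresholds only."""
    std = statistics.pstdev([magnitude(s) for s in window])
    if std < REST_MAX_STD:
        return "rest"
    if std < WALK_MAX_STD:
        return "walking"
    return "running"
```

For example, a window of constant readings `[(0.0, 0.0, 9.81)] * 50` has zero spread and is labeled `"rest"`.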

With machine learning techniques, on the other hand, this process of finding the right parameters can be greatly simplified. By teaching an algorithm which data corresponds to which outcome, using training and verification data, the analysis parameters can be determined automatically, or at least semi-automatically. A wide range of machine learning algorithms exists, including the currently very popular convolutional neural networks.
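A minimal sketch of how a parameter can be learned instead of hand-picked: a decision stump scans candidate thresholds on labeled training data, keeps the one with the fewest errors, and is then checked on separate verification data. The two-class setup (0 = resting, 1 = moving), the feature values and the function names are assumptions for illustration only.

```python
def fit_threshold(values, labels):
    """Learn the threshold that best separates two classes (0 and 1).

    Decision stump: every midpoint between neighbouring sorted feature values
    is tried as a candidate, and the one with the fewest training errors is
    kept. Values above the threshold are predicted as class 1.
    Requires at least two training values.
    """
    pairs = sorted(zip(values, labels))
    candidates = [(a[0] + b[0]) / 2 for a, b in zip(pairs, pairs[1:])]
    best_t, best_err = None, len(pairs) + 1
    for t in candidates:
        errors = sum((v > t) != bool(y) for v, y in pairs)
        if errors < best_err:
            best_t, best_err = t, errors
    return best_t


def accuracy(values, labels, t):
    """Fraction of samples whose thresholded prediction matches the label."""
    hits = sum((v > t) == bool(y) for v, y in zip(values, labels))
    return hits / len(values)
```

Usage: `t = fit_threshold([0.1, 0.2, 0.25, 1.5, 2.0, 2.2], [0, 0, 0, 1, 1, 1])` learns a threshold of 0.875 from the training data, and `accuracy(...)` on held-out verification data then indicates whether that threshold generalizes.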

Context Analysis

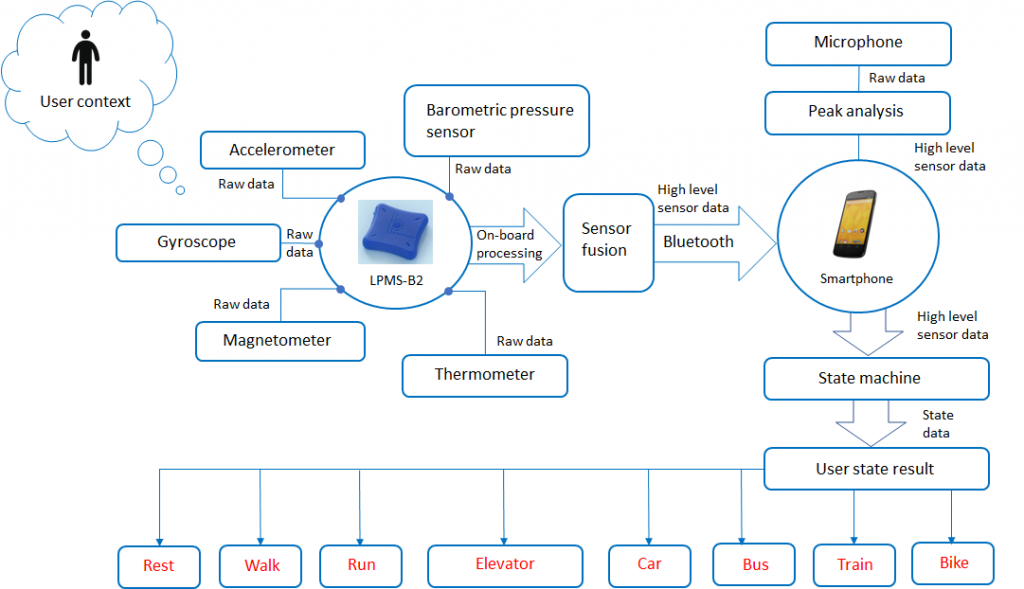

Many health care applications rely on the correct classification of a user’s daily activities, as these strongly reflect the user’s lifestyle and possible health risks. One way of detecting human activity is to monitor body motion using motion sensors such as gyroscopes and accelerometers. In the application described here, we monitor a person’s mode of transportation, specifically:

- Rest

- Walking

- Running

- In car

- On train
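Before any classification, the continuous sensor stream has to be split into segments that each carry one label. The Python sketch below assumes fixed-length, overlapping windows labeled by the majority of their ground-truth samples; the window size, the step, the `Mode` enum and `make_windows` are hypothetical and not taken from our implementation.

```python
from collections import Counter
from enum import Enum


class Mode(Enum):
    """The five transportation modes listed above."""
    REST = 0
    WALKING = 1
    RUNNING = 2
    IN_CAR = 3
    ON_TRAIN = 4


def make_windows(samples, labels, size, step):
    """Cut a labeled sensor stream into overlapping fixed-length windows.

    Each window is paired with the most frequent ground-truth label among
    its samples. Trailing samples that do not fill a whole window are dropped.
    """
    windows = []
    for start in range(0, len(samples) - size + 1, step):
        chunk = samples[start:start + size]
        label = Counter(labels[start:start + size]).most_common(1)[0][0]
        windows.append((chunk, label))
    return windows
```

A step smaller than the window size yields overlapping windows, which produces more training examples from the same recording at the cost of correlated samples.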

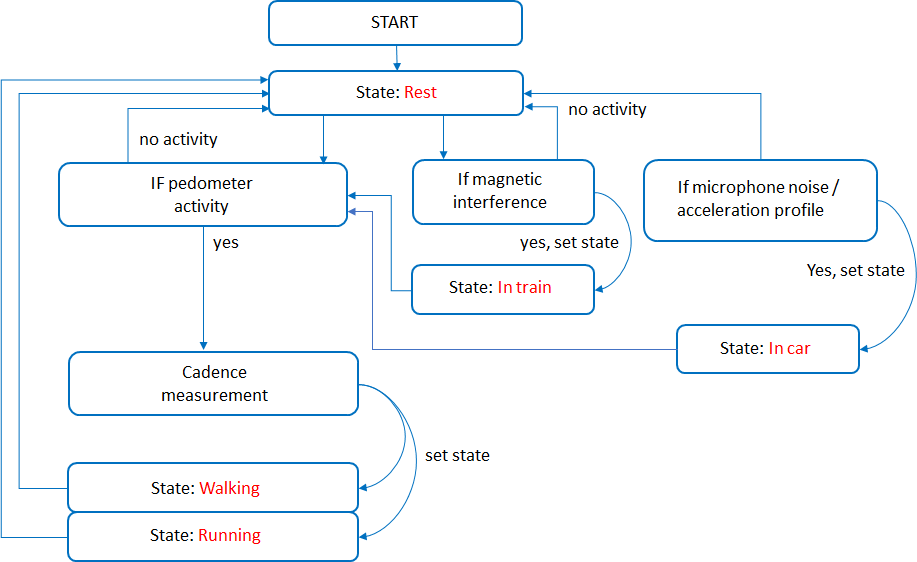

To compare the deterministic analysis and machine learning approaches, we first implemented a state machine based on deterministic analysis parameters.

The result is a relatively complicated state machine that needs to be tuned very carefully. This may have been due to our lack of patience, but despite our best efforts we were not able to reach detection accuracies above around 60%. Rather than spending much more time manually tuning this algorithm, we switched to a machine learning approach.
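To give an idea of why such a state machine needs careful tuning, here is a heavily simplified Python sketch. It maps a per-window feature to a raw state via hand-set thresholds and only switches state once the new state has persisted for a given number of consecutive windows. All names and values are hypothetical; the state machine described here was considerably more complex, with more parameters that interact with each other.

```python
# Hypothetical hand-tuned parameters; each one must be adjusted manually.
THRESHOLDS = [("rest", 0.3), ("walking", 3.0)]  # (state, upper bound on feature)
DEFAULT_STATE = "running"                       # used when no bound matches
MIN_CONSECUTIVE = 3                             # windows before a switch


class ThresholdStateMachine:
    """Debounced state machine driven by fixed feature thresholds."""

    def __init__(self, initial="rest"):
        self.state = initial
        self.candidate = None  # state currently trying to take over
        self.count = 0         # consecutive windows supporting the candidate

    def _raw_state(self, feature):
        """Map a single feature value to a state using the thresholds."""
        for name, upper in THRESHOLDS:
            if feature < upper:
                return name
        return DEFAULT_STATE

    def update(self, feature):
        """Feed one window's feature value and return the current state."""
        raw = self._raw_state(feature)
        if raw == self.state:
            # Observation confirms the current state: drop any candidate.
            self.candidate, self.count = None, 0
            return self.state
        if raw == self.candidate:
            self.count += 1
        else:
            self.candidate, self.count = raw, 1
        if self.count >= MIN_CONSECUTIVE:
            self.state, self.candidate, self.count = raw, None, 0
        return self.state
```

Even in this toy version, the thresholds and `MIN_CONSECUTIVE` trade off against each other: a larger count suppresses short spikes but delays real transitions.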

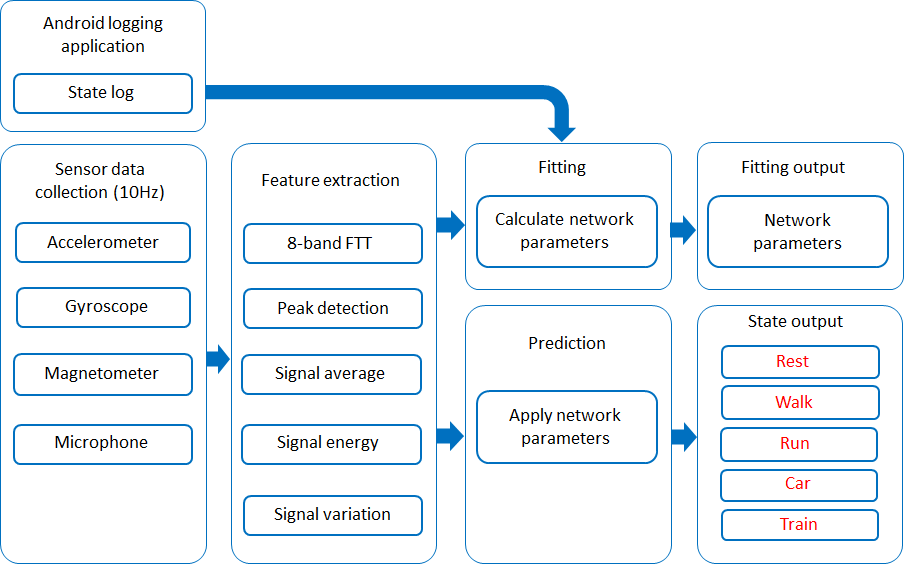

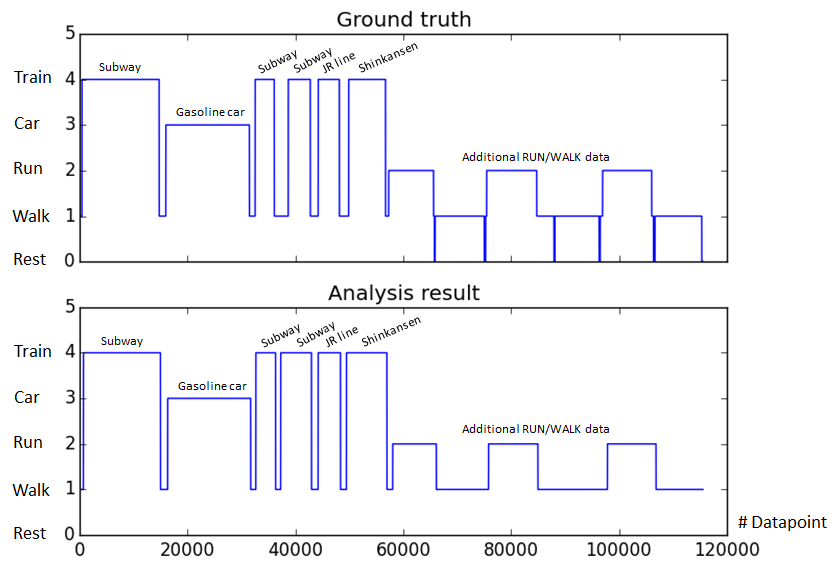

The resulting system structure is noticeably simpler than the deterministic state machine. Besides standard feature extraction, a central part of the algorithm is the data logging and training module. We collected over 1 million training samples to generate the parameters for our detection network. As a result, even though we used a relatively simple machine learning algorithm, we were able to reach a detection accuracy of more than 90%. A comparison between ground truth data and classification results from raw data is displayed below.
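The specific algorithm is not described here, so the following Python sketch substitutes a nearest-centroid classifier to show the overall pipeline: simple features (mean and standard deviation of the acceleration magnitude) are extracted per window, one centroid per class is learned from labeled training windows, and accuracy is measured as the fraction of correctly classified windows. The feature choice, the classifier and all names are assumptions for illustration, not our detection network.

```python
import math
import statistics


def extract_features(window):
    """Hypothetical features: mean and std of acceleration magnitude."""
    mags = [math.sqrt(ax * ax + ay * ay + az * az) for ax, ay, az in window]
    return (statistics.fmean(mags), statistics.pstdev(mags))


def train_centroids(features, labels):
    """Nearest-centroid training: average feature vector per class."""
    sums, counts = {}, {}
    for f, y in zip(features, labels):
        acc = sums.setdefault(y, [0.0] * len(f))
        for i, v in enumerate(f):
            acc[i] += v
        counts[y] = counts.get(y, 0) + 1
    return {y: tuple(v / counts[y] for v in acc) for y, acc in sums.items()}


def predict(centroids, f):
    """Return the class whose centroid is closest (Euclidean) to f."""
    return min(centroids, key=lambda y: math.dist(centroids[y], f))


def accuracy(centroids, features, labels):
    """Fraction of windows whose predicted class matches the ground truth."""
    hits = sum(predict(centroids, f) == y for f, y in zip(features, labels))
    return hits / len(labels)
```

In practice, accuracy would be computed on windows held out from training; the training step itself is the only place where the parameters (here, the centroids) come from data rather than from manual tuning.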

Conclusion

We strongly believe in the use of machine learning / AI techniques for sensor data classification. In combination with LP-RESEARCH sensor fusion algorithms, these methods add a further layer of insight for our data analysis customers.

If this topic sounds relevant to you and you are looking for a solution to a related problem, contact us for further discussion.