Location-based VR Tracking for All SteamVR Applications

UPDATE 1 – LPVR now offers VIVE Pro support!

UPDATE 2 – LPVR can now talk to all optical tracking systems that support VRPN (VICON, ART etc.).

UPDATE 3 – Of special interest to automotive customers: LPVR also supports Autodesk VRED.

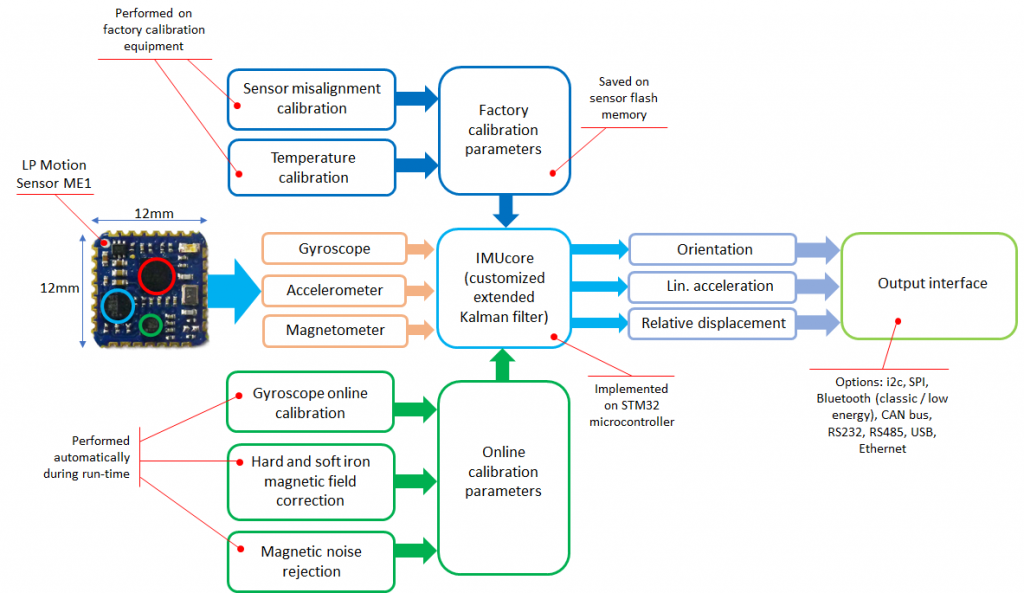

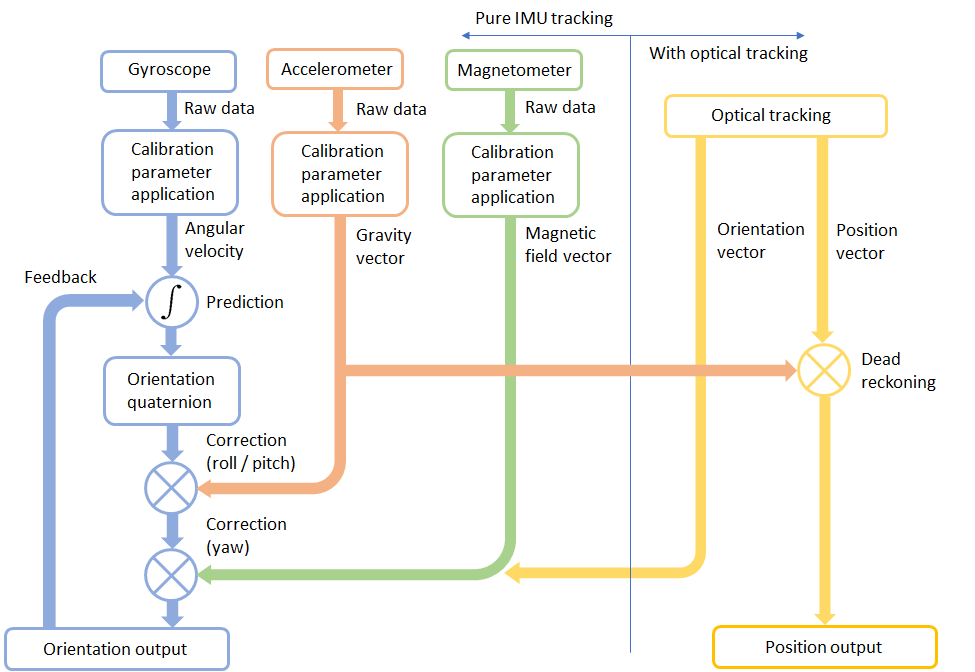

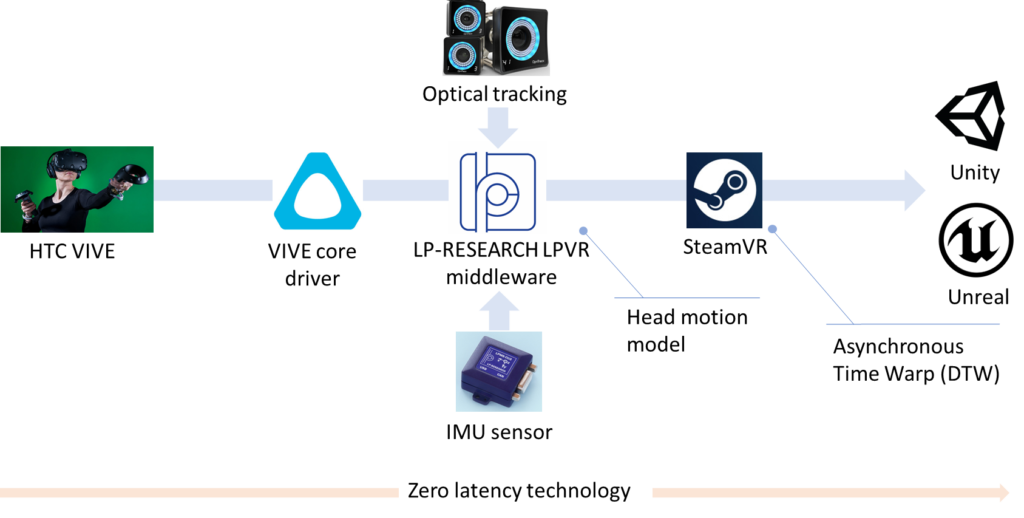

Current VR products cannot serve several important markets due to limitations of their tracking systems. Out of the box, both the HTC Vive and the Oculus Rift are limited to tracking areas smaller than 5 m x 5 m, which is too small for most multiplayer applications. We previously presented a solution that combines our IMU motion-sensing technology, OptiTrack camera-based tracking and the HTC Vive to enable responsive multiplayer VR experiences over larger areas. Because that solution required custom interfacing, however, applications still had to be prepared specifically for it.

We have now improved our software stack further and can provide a SteamVR driver for our solution. On the one hand, this means that any existing SteamVR application automatically supports the arbitrarily large tracking areas covered by the OptiTrack system. On the other hand, no additional plugins for Unity, Unreal or your development platform of choice are needed; support is automatic. Responsive behavior is guaranteed by using LP-RESEARCH’s IMU technology in combination with standard low-latency VR technologies such as asynchronous time warping and late latching. An overview of the functionality of the system is shown in the image above.
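
To give a feel for why pairing a fast IMU with a slower optical reference helps, here is a minimal Python sketch of a one-axis complementary filter. It is an illustration only, not LPVR's actual fusion algorithm: the class `YawFusion`, the function `simulate`, the gain, the update rates and the gyro bias are all assumptions made up for this example. The idea is that the gyroscope is integrated at a high rate to keep latency low, while each optical measurement nudges the estimate back toward the drift-free reference.

```python
import math


def wrap_angle(a):
    """Wrap an angle in radians into the range [-pi, pi]."""
    return math.atan2(math.sin(a), math.cos(a))


class YawFusion:
    """Hypothetical one-axis complementary filter (illustration only)."""

    def __init__(self, gain=0.05, yaw=0.0):
        self.gain = gain  # assumed blend factor applied per optical update
        self.yaw = yaw    # current yaw estimate in radians

    def predict(self, gyro_rate, dt):
        # High-rate step: integrate the gyroscope reading (rad/s).
        self.yaw = wrap_angle(self.yaw + gyro_rate * dt)
        return self.yaw

    def correct(self, optical_yaw):
        # Low-rate step: pull the estimate toward the optical measurement.
        error = wrap_angle(optical_yaw - self.yaw)
        self.yaw = wrap_angle(self.yaw + self.gain * error)
        return self.yaw


def simulate(seconds=10.0, imu_hz=1000, optical_every=10,
             true_rate=0.5, gyro_bias=0.02):
    """Compare fused and gyro-only yaw error for a constant rotation.

    All numbers are assumed values chosen for the example.
    Returns (fused_error, gyro_only_error) in radians.
    """
    dt = 1.0 / imu_hz
    fused = YawFusion(gain=0.05)
    gyro_only = 0.0
    true_yaw = 0.0
    for step in range(1, int(seconds * imu_hz) + 1):
        true_yaw = wrap_angle(true_rate * step * dt)
        measured = true_rate + gyro_bias  # biased gyro reading
        fused.predict(measured, dt)
        gyro_only = wrap_angle(gyro_only + measured * dt)
        if step % optical_every == 0:
            # The optical system is treated as a noise-free reference here.
            fused.correct(true_yaw)
    fused_error = abs(wrap_angle(fused.yaw - true_yaw))
    gyro_error = abs(wrap_angle(gyro_only - true_yaw))
    return fused_error, gyro_error


if __name__ == "__main__":
    fused_error, gyro_error = simulate()
    print(f"fused error:     {fused_error:.4f} rad")
    print(f"gyro-only error: {gyro_error:.4f} rad")
```

In this toy setup the gyro-only estimate keeps drifting with the bias, while the fused estimate stays close to the reference. A real system would track full 3D orientation and position and would have to cope with measurement noise, latency and dropouts in the optical data.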