LPNAV for Flexible, Rapid AGV Deployment

LPNAV enables automatic guided vehicles (AGV) to rapidly understand their environment and be ready for safe and efficient operation; no calibration, manual map building etc. is required.

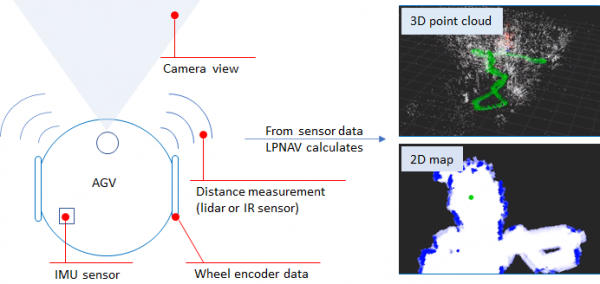

With the help of LPNAV, mobile logistics platforms can operate (localize) in both, indoor and outdoor environments with the same set of sensors (Figure 1) and a unified map, e.g. when transporting an item from inside a warehouse to a truck parked in front of the warehouse. This offers a big cost-saving potential for applications in which so far the transition from indoor to outdoor settings required specialized equipment or manual handling.

Outdoor Localization

In a previous post we have shown the capability of LPNAV to operate in a small, crowded indoor environment. After further optimization of the algorithm we are now able to show the system working well in outdoor settings. Uncontrolled outdoor environments are particularly challenging as lighting conditions can vary very strongly and perception can be disturbed by pedestrians, passing cars etc.

In the video above we show the following capabilities of the system:

- LPNAV is able to build a 3D map of its environment and localizes itself in real-time relative to its starting position.

- Previously acquired map data can be used for localization. The map is automatically updated to environment changes.

- When manually placed at a deliberate location on the map, an LPNAV-powered robot can instantly re-localize.

- The system is robust to camera occlusions. Sensor fusion with IMU and odometry allows temporary operation without visual features.

Figure 1 – LPNAV combines information from visual SLAM (camera), inertial measurement unit (IMU), distance sensors (lidar, IR) and wheel encoder data to calculate low-latency, high-accuracy localization results. The robot maps its environment to both, a 3D point cloud map and a human-readable 2D obstacle map.

2D Real-time Map Building

The 3D point-cloud maps built by LPNAV’s visual SLAM work well for computational localization of the robot inside its environment, but they are hard to intuitively understand by humans. Therefore we added a feature to LPNAV that allows building 2D maps of the robot environment based on information from its IR distance sensors. To achieve 2D wall / obstacle mapping at larger distances, alternatively to the IR sensors a 2D Lidar can be used.

The 2D real-time mapping capability is demonstrated in the video below: