LPVR-DUO Featured at Unity for Industry Japan Conference

Unity for Industry Conference – XRは次のステージへ

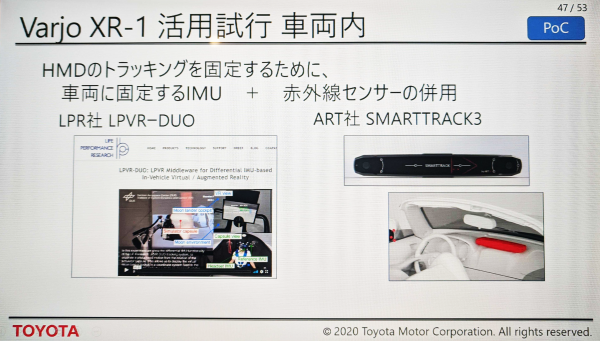

LPVR-DUO has been featured at the Unity for Industry online conference in Japan. TOYOTA project manager Koichi Kayano introduced LPVR-DUO with Varjo XR-1 and ART Smarttrack 3 for in-car augmented reality (see the slide above).

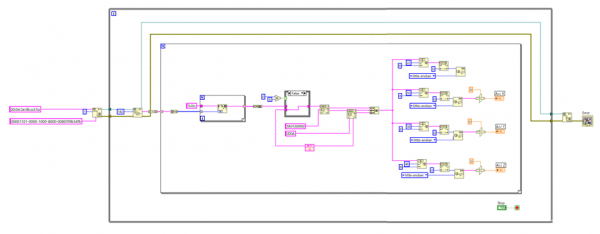

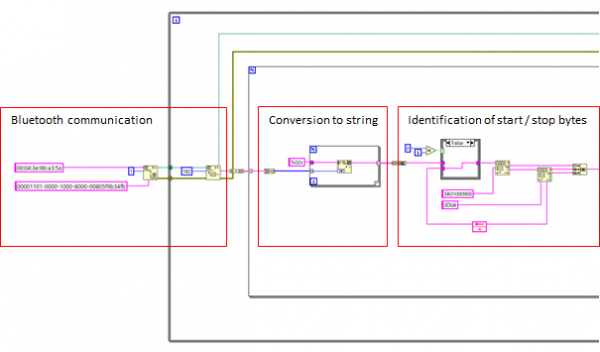

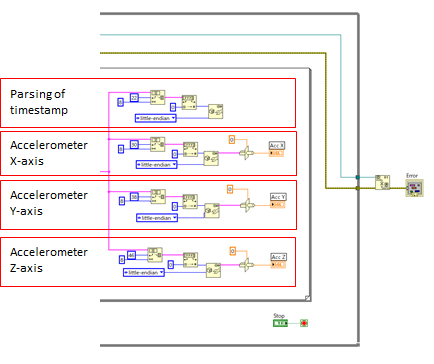

Besides explaining the fundamental functional principle of LPVR-DUO inside a moving vehicle – using a fusion of HMD IMU data, vehicle-fixed inertial measurements and outside-in optical tracking information – Mr. Kayano presented videos of content for a potential end-user application:

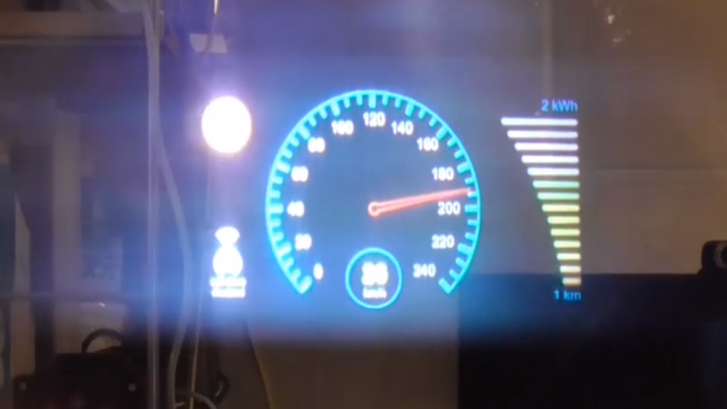

Based on a heads-up display-like visualization, TOYOTA’s implementation shows navigation and speed information to the driver. The images below show two driving situations with a virtual dashboard augmentation overlay.

AR Head-Mounted Display vs. Heads-Up Display

This use-case leads us to a discussions of the differences between an HMD-based visualization solution and a heads-up display (HUD) that is e.g. fixed stationary to the top of a car’s console. While putting on a head mounted display does require a minor additional effort by the driver, there are several advantages of using a wearable device in this scenario.

Content can be displayed at any location in the car, from projecting content onto the dashboard, the middle console, the side windows etc. A heads-up display works only in one specific spot.

As the HMD shows information separately to the left and right eye of the driver, we can display three-dimensional images. This allows for accurate placement of objects in 3D space. The correct positioning within the field of view of the driver is essential for safety relevant data. In case of a hazardous situation detected by a car’s sensor array the driver will know exactly where the danger is occurring from.

These are just two of many aspects that set HMD-based augmented reality apart from a heads-up display. The fact that large corporations like TOYOTA are starting to investigate this specific topic shows that the application of augmented reality in the car will be an important feature for the future of mobility.

NOTE: Image contents courtesy of TOYOTA Motor Corporation.