LPVR Middleware: A Full Solution for AR / VR

Introducing LPVR Middleware

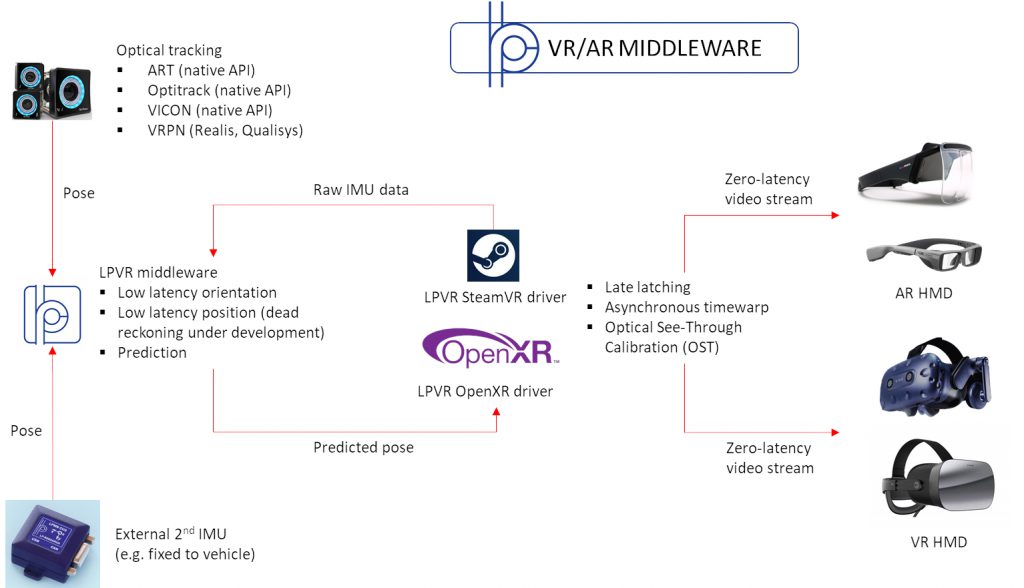

Building on the technology we developed for our IMU sensors and large-scale VR tracking systems, we have created a full motion tracking and rendering pipeline for virtual reality (VR) and augmented reality (AR) applications.

The LPVR middleware is a full solution for AR / VR that enables headset manufacturers to easily create a state-of-the-art visualization pipeline customized to their product. Specifically, our solution offers the following features:

- Flexible zero-latency tracking adaptable to any combination of IMU and optical tracking

- Rendering pipeline with motion prediction, late latching and asynchronous timewarp functionality

- Calibration algorithms for optical parameters (lens distortion, optical see-through calibration)

- Full integration in commonly used driver frameworks like OpenVR and OpenXR

- Specific algorithms and tools to enable VR / AR in vehicles (car, plane etc.) or motion simulators

Application of LPVR Middleware to In-Car VR / AR

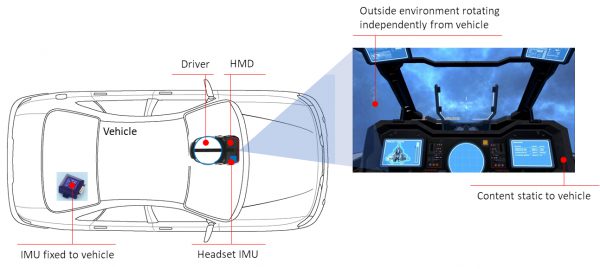

The tracking backend of the LPVR middleware stands out in that it flexibly combines multiple optical systems and inertial measurement units (IMUs) for joint position and orientation tracking. In particular, it decouples the head motion of a user from the motion of the vehicle the user is riding in, such as a car or airplane.

As shown in the illustration, the interior of the vehicle can then be displayed as static relative to the user, while the scenery outside the vehicle moves with the vehicle's motion.
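
To make the decoupling idea concrete, here is a minimal sketch of the underlying geometry, not the LPVR implementation itself. It assumes that the head and the vehicle each have an orientation estimate expressed in a common world frame, and it only covers orientation (position is omitted). All function names are hypothetical and chosen for illustration.

```python
import math

def quat_from_yaw(deg):
    """Unit quaternion (w, x, y, z) for a rotation about the vertical z axis."""
    half = math.radians(deg) / 2.0
    return (math.cos(half), 0.0, 0.0, math.sin(half))

def quat_conj(q):
    """Conjugate of a quaternion; equals the inverse for unit quaternions."""
    w, x, y, z = q
    return (w, -x, -y, -z)

def quat_mul(a, b):
    """Hamilton product a * b."""
    aw, ax, ay, az = a
    bw, bx, by, bz = b
    return (
        aw * bw - ax * bx - ay * by - az * bz,
        aw * bx + ax * bw + ay * bz - az * by,
        aw * by - ax * bz + ay * bw + az * bx,
        aw * bz + ax * by - ay * bx + az * bw,
    )

def head_in_vehicle(q_world_vehicle, q_world_head):
    """Head orientation relative to the vehicle: inverse(vehicle) * head.

    Rendering the cabin with this relative pose keeps the interior static
    for the user, while the outside scenery is rendered with the world pose.
    """
    return quat_mul(quat_conj(q_world_vehicle), q_world_head)

def yaw_deg(q):
    """Yaw angle in degrees of a unit quaternion."""
    w, x, y, z = q
    return math.degrees(math.atan2(2 * (w * z + x * y), 1 - 2 * (y * y + z * z)))

# Hypothetical example: the car has turned 90 degrees, and the passenger
# has turned their head a further 30 degrees relative to the cabin.
vehicle = quat_from_yaw(90.0)
head = quat_from_yaw(120.0)
relative = head_in_vehicle(vehicle, head)
```

In this sketch, a passenger who keeps looking straight ahead while the car turns has a relative orientation equal to the identity, so the cabin does not appear to rotate. A real system would additionally have to estimate both orientations from noisy IMU and optical data.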

For any augmented or virtual reality application in a moving vehicle, this functionality is essential to providing an immersive experience for the user. LP-Research is the industry leader for providing customized sensor fusion solutions for augmented and virtual reality.

If you are interested in this solution, please contact us to discuss your application.